# Set up a Single Sign-On sidecar

One way to protect a deployed app without modifying its codebase is to add a sidecar container that handles the authentication flow against an OIDC provider. The example below demonstrates how this can be achieved.

# Configuration steps

On Keycloak we need to use a new or an existing OAuth client with some configurations.

A kubernetes deplyoment consisting of a "nginx" and an additional "oauth2-proxy" container.

As OIDC provider keycloak will be used.

Created and used client on keycloak: oauth2-proxy

The application named "prodapp" will be acessible with the url: https://prodapp.ci.kube-plus.cloud

Expected behaviour: On accessing the URL and if the user is not already logged in from another/valid session (SSO) then the authentication process will take place.

Project "oauth2-proxy": https://github.com/oauth2-proxy/oauth2-proxy

Configuration of the oauth2-proxy: see the oauth2-proxy options reference

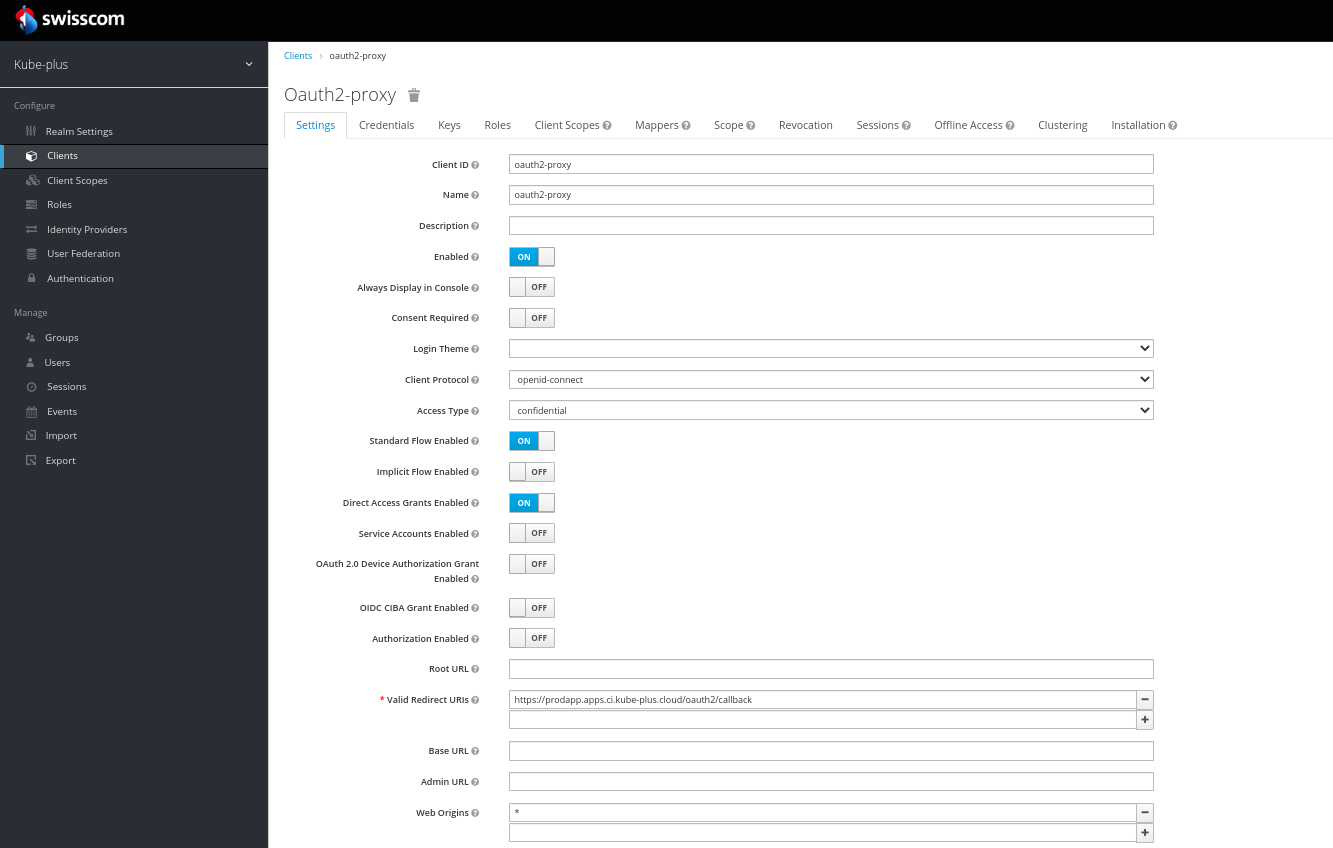

# The client setup on keycloak

For this how-to, we set up a dedicated OIDC client "oauth2-proxy" in the kube-plus realm. The configuration is minimal and only shows what is necessary to set up the SSO experience. Real production environments need more specific settings to control who can access a resource.
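If you prefer to script the client instead of using the admin console, the settings shown in the screenshots of the following sections can roughly be expressed as a Keycloak client representation. The sketch below is an assumption-based reconstruction and not an export of the client used here. The mapper names, the `full.path` value and the token flags are guesses, and `keycloak_client_json` is a hypothetical helper. It prints JSON that the Keycloak admin CLI can read from stdin (`kcadm.sh create clients -r kube-plus -f -`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a minimal Keycloak client representation for
# oauth2-proxy. Each argument is an app hostname; one redirect URI
# (https://<host>/oauth2/callback) is generated per host.
# Assumption: mapper names and config flags are illustrative, not exported.
keycloak_client_json() {
  local uris="" host
  for host in "$@"; do
    # Separate URIs with ", " (no separator before the first one).
    if [ -n "$uris" ]; then uris="$uris, "; fi
    uris="$uris\"https://$host/oauth2/callback\""
  done
  cat <<EOF
{
  "clientId": "oauth2-proxy",
  "enabled": true,
  "protocol": "openid-connect",
  "publicClient": false,
  "standardFlowEnabled": true,
  "redirectUris": [$uris],
  "protocolMappers": [
    {
      "name": "groups",
      "protocol": "openid-connect",
      "protocolMapper": "oidc-group-membership-mapper",
      "config": {
        "claim.name": "groups",
        "full.path": "true",
        "id.token.claim": "true",
        "access.token.claim": "true",
        "userinfo.token.claim": "true"
      }
    },
    {
      "name": "audience",
      "protocol": "openid-connect",
      "protocolMapper": "oidc-audience-mapper",
      "config": {
        "included.client.audience": "oauth2-proxy",
        "id.token.claim": "false",
        "access.token.claim": "true"
      }
    }
  ]
}
EOF
}

# Usage (after logging in with "kcadm.sh config credentials ..."):
#   keycloak_client_json prodapp.ci.kube-plus.cloud | kcadm.sh create clients -r kube-plus -f -
```

Passing several hostnames produces one redirect URI per app, which also covers the second app used in the SSO check further below.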

# Definitions oauth2-proxy client

# Client: redirect URI.

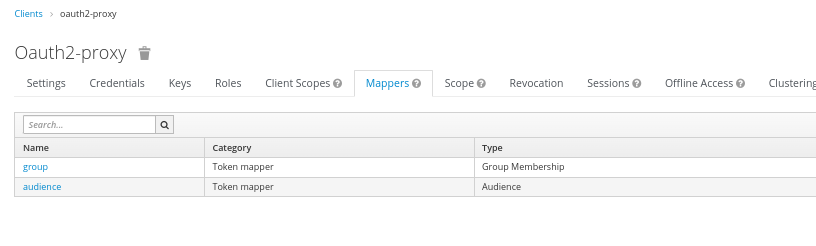

# Client:mappers: overview Group and Audience

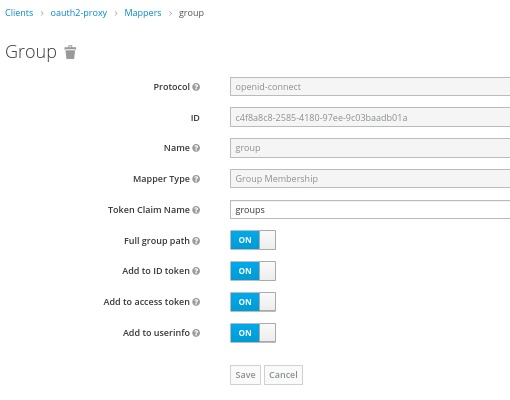

# Client:mappers: Token Claim Name groups and Type Group Membership

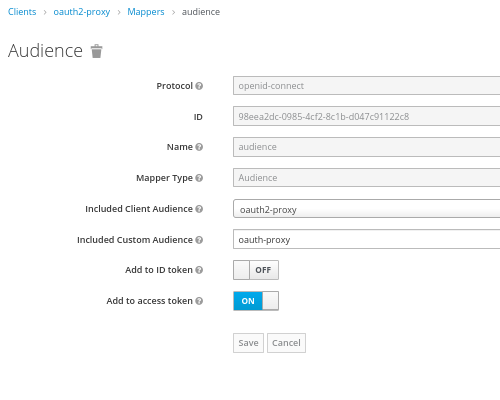

# Client:mappers: Type Audience and oauth-proxy

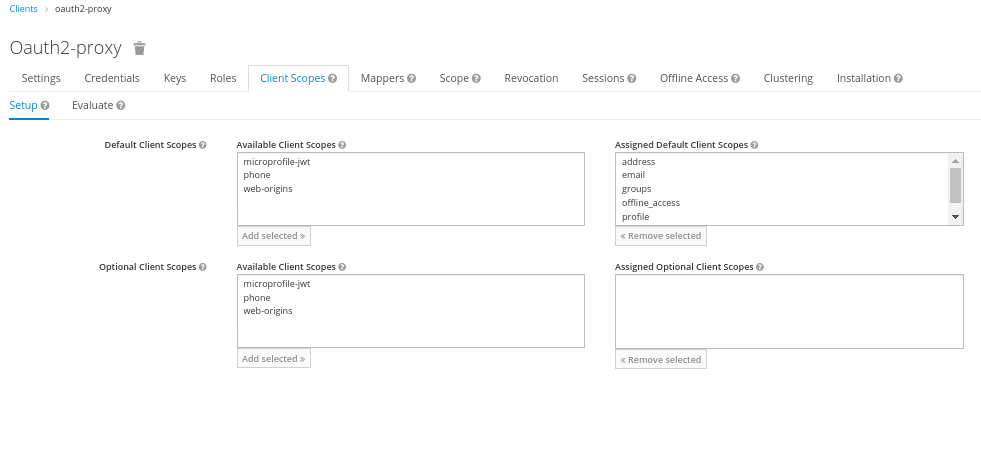

# Client:client scopes:

# The Kubernetes deployment

Below is the content of the manifest file "prodapp-oauth2.yaml".

It deploys nginx together with oauth2-proxy.

oauth2-proxy receives its configuration through `args`, and the secrets are consumed as environment variables (`env`).
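This works because oauth2-proxy accepts its command-line options as environment variables as well. The variable name is the option name prefixed with `OAUTH2_PROXY_`, upper-cased, with hyphens replaced by underscores. This is why `OAUTH2_PROXY_CLIENT_SECRET` has the same effect as `--client-secret`. The sketch below illustrates the naming rule. `flag_to_env` is a hypothetical helper. Some list-valued options use a pluralised name (for example, `--upstream` is read from `OAUTH2_PROXY_UPSTREAMS`), which this simple rule does not cover, so check the options reference before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the environment variable name that oauth2-proxy
# reads for a given command-line flag (simple rule only; pluralised list
# options such as --upstream are NOT handled).
flag_to_env() {
  local name="${1#--}"   # strip the leading "--"
  name="${name%%=*}"     # drop an optional "=value" part
  # Upper-case letters and turn hyphens into underscores.
  printf 'OAUTH2_PROXY_%s\n' "$(printf '%s' "$name" | tr 'a-z-' 'A-Z_')"
}

# Print the variable names of the three secrets used in the manifest.
for flag in --client-id --client-secret --cookie-secret; do
  flag_to_env "$flag"
done
```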

```yaml
# namespace
---
apiVersion: v1
kind: Namespace
metadata:
  labels:
    kubernetes.io/metadata.name: authapps
  name: authapps
---
# Service: oauth2-proxy (port 4180) is the request handler. It forwards
# authenticated requests to nginx, see the "--upstream" argument in the
# deployment further below.
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: prodapp
  name: prodapp-oauth2-proxy
  namespace: authapps
spec:
  ports:
    - name: auth
      port: 4180
      protocol: TCP
      targetPort: 4180
  selector:
    app.kubernetes.io/name: prodapp
  type: ClusterIP
---
# Secrets: oauth2-proxy client credentials and the required TLS CA bundles
apiVersion: v1
kind: Secret
metadata:
  name: oauth2-proxy
  namespace: authapps
stringData:
  OAUTH2_PROXY_CLIENT_ID: oauth2-proxy # Keycloak client created for this how-to
  OAUTH2_PROXY_CLIENT_SECRET: <<redacted>> # secret of the Keycloak client
  OAUTH2_PROXY_COOKIE_SECRET: 1KilodeD4qFD_ym1MwikUQo3EWkbD58ltQ3RurhN0N0= # random. Generate one e.g. with bash: dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_'; echo
---
apiVersion: v1
kind: Secret
metadata:
  annotations:
    replicator.v1.mittwald.de/replicate-from: cert-manager/ca-bundle-kube-plus
    replicator.v1.mittwald.de/replicated-from-version: "0"
    argocd.argoproj.io/compare-options: IgnoreExtraneous
  labels:
    app: prodapp
    app.kubernetes.io/instance: prodapp
  name: ca-bundle-kube-plus
  namespace: authapps
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  annotations:
    replicator.v1.mittwald.de/replicate-from: cert-manager/ca-bundle-swisscom
    replicator.v1.mittwald.de/replicated-from-version: "0"
    argocd.argoproj.io/compare-options: IgnoreExtraneous
  labels:
    app: prodapp
    app.kubernetes.io/instance: prodapp
  name: ca-bundle-swisscom
  namespace: authapps
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  annotations:
    replicator.v1.mittwald.de/replicate-from: cert-manager/ca-bundle-letsencrypt-staging
    replicator.v1.mittwald.de/replicated-from-version: "0"
    argocd.argoproj.io/compare-options: IgnoreExtraneous
  labels:
    app: prodapp
    app.kubernetes.io/instance: oauth2-proxy
  name: ca-bundle-letsencrypt-staging
  namespace: authapps
type: Opaque
---
# service account used by oauth2-proxy
apiVersion: v1
kind: ServiceAccount
metadata:
  name: oauth2-proxy
  namespace: authapps
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: auth:oauth2-proxy
rules:
  - apiGroups:
      - ""
    resources:
      - secrets
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auth:oauth2-proxy
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: auth:oauth2-proxy
subjects:
  - kind: ServiceAccount
    name: oauth2-proxy
    namespace: authapps
---
# Deployment: nginx plus the oauth2-proxy sidecar
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prodapp
  namespace: authapps
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: prodapp
  template:
    metadata:
      labels:
        app.kubernetes.io/name: prodapp
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - name: http
              containerPort: 80
          resources:
            requests:
              cpu: 10m
              memory: 100Mi
            limits:
              cpu: 200m
              memory: 500Mi
        - name: oauth2-proxy
          image: quay.io/oauth2-proxy/oauth2-proxy:v7.3.0
          args:
            - --provider=keycloak-oidc
            - --cookie-secure=true
            - --cookie-samesite=lax
            - --cookie-refresh=1h
            - --cookie-expire=4h
            - --cookie-name=_oauth2_proxy_auth
            - --set-authorization-header=true
            - --email-domain=*
            - --http-address=0.0.0.0:4180
            - --upstream=http://localhost:80/
            - --skip-provider-button=true
            - --whitelist-domain=*.ci.kube-plus.cloud
            - --oidc-issuer-url=https://auth.ci.kube-plus.cloud/auth/realms/kube-plus
          env:
            - name: OAUTH2_PROXY_CLIENT_ID
              valueFrom:
                secretKeyRef:
                  name: oauth2-proxy
                  key: OAUTH2_PROXY_CLIENT_ID
            - name: OAUTH2_PROXY_CLIENT_SECRET
              valueFrom:
                secretKeyRef:
                  name: oauth2-proxy
                  key: OAUTH2_PROXY_CLIENT_SECRET
            - name: OAUTH2_PROXY_COOKIE_SECRET
              valueFrom:
                secretKeyRef:
                  name: oauth2-proxy
                  key: OAUTH2_PROXY_COOKIE_SECRET
          ports:
            - containerPort: 4180
              name: auth
              protocol: TCP
          volumeMounts:
            - mountPath: /etc/ssl/certs/ca-bundle-letsencrypt-staging.crt
              name: ca-bundle-letsencrypt-staging
              subPath: ca.crt
            - mountPath: /etc/ssl/certs/ca-bundle-swisscom.crt
              name: ca-bundle-swisscom
              subPath: ca.crt
            - mountPath: /etc/ssl/certs/ca-bundle-kube-plus.crt
              name: ca-bundle-kube-plus
              subPath: ca.crt
          resources:
            requests:
              cpu: 10m
              memory: 100Mi
            limits:
              cpu: 100m
              memory: 500Mi
      serviceAccountName: oauth2-proxy
      volumes:
        - name: ca-bundle-letsencrypt-staging
          secret:
            optional: false
            secretName: ca-bundle-letsencrypt-staging
        - name: ca-bundle-swisscom
          secret:
            optional: false
            secretName: ca-bundle-swisscom
        - name: ca-bundle-kube-plus
          secret:
            optional: false
            secretName: ca-bundle-kube-plus
---
# Ingress with a Let's Encrypt certificate
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    # these annotations give us automatic Let's Encrypt integration with valid public certificates
    cert-manager.io/cluster-issuer: letsencrypt-contour
    ingress.kubernetes.io/force-ssl-redirect: "true"
    kubernetes.io/ingress.class: contour # "contour" is the default ingress class you should use
    kubernetes.io/tls-acme: "true"
  name: prodapp-ingress
  namespace: authapps
spec:
  tls:
    - hosts:
        - prodapp.ci.kube-plus.cloud
      secretName: prodapp-tls
  rules:
    - host: prodapp.ci.kube-plus.cloud
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: prodapp-oauth2-proxy
                port:
                  number: 4180
```
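The `OAUTH2_PROXY_COOKIE_SECRET` value in the manifest is only an example, so generate your own. oauth2-proxy uses the cookie secret as an AES key, so it must be 16, 24 or 32 bytes long. The sketch below is a shell function equivalent to the `dd` one-liner in the manifest comment. It encodes 32 random bytes as URL-safe base64 and decodes the result again as a sanity check. `gen_cookie_secret`, `decoded_length` and `$CLIENT_SECRET` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a random cookie secret (32 bytes, URL-safe base64),
# equivalent to the dd/base64/tr one-liner in the manifest comment.
gen_cookie_secret() {
  head -c 32 /dev/urandom | base64 | tr -d '\n' | tr -- '+/' '-_'
  echo
}

# Hypothetical helper: decode a URL-safe base64 secret and count its bytes.
decoded_length() {
  printf '%s' "$1" | tr -- '-_' '+/' | base64 -d | wc -c | tr -d ' '
}

secret="$(gen_cookie_secret)"
echo "encoded length: ${#secret}"                  # 44 characters
echo "decoded bytes: $(decoded_length "$secret")"  # 32 bytes

# Usage idea (not executed here): create the Secret directly instead of
# committing the values to the manifest ($CLIENT_SECRET is a placeholder):
#   kubectl -n authapps create secret generic oauth2-proxy \
#     --from-literal=OAUTH2_PROXY_CLIENT_ID=oauth2-proxy \
#     --from-literal=OAUTH2_PROXY_CLIENT_SECRET="$CLIENT_SECRET" \
#     --from-literal=OAUTH2_PROXY_COOKIE_SECRET="$(gen_cookie_secret)"
```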

Deploy the manifest to the target kube+ cluster:

```shell
kubectl apply -f prodapp-oauth2.yaml
```

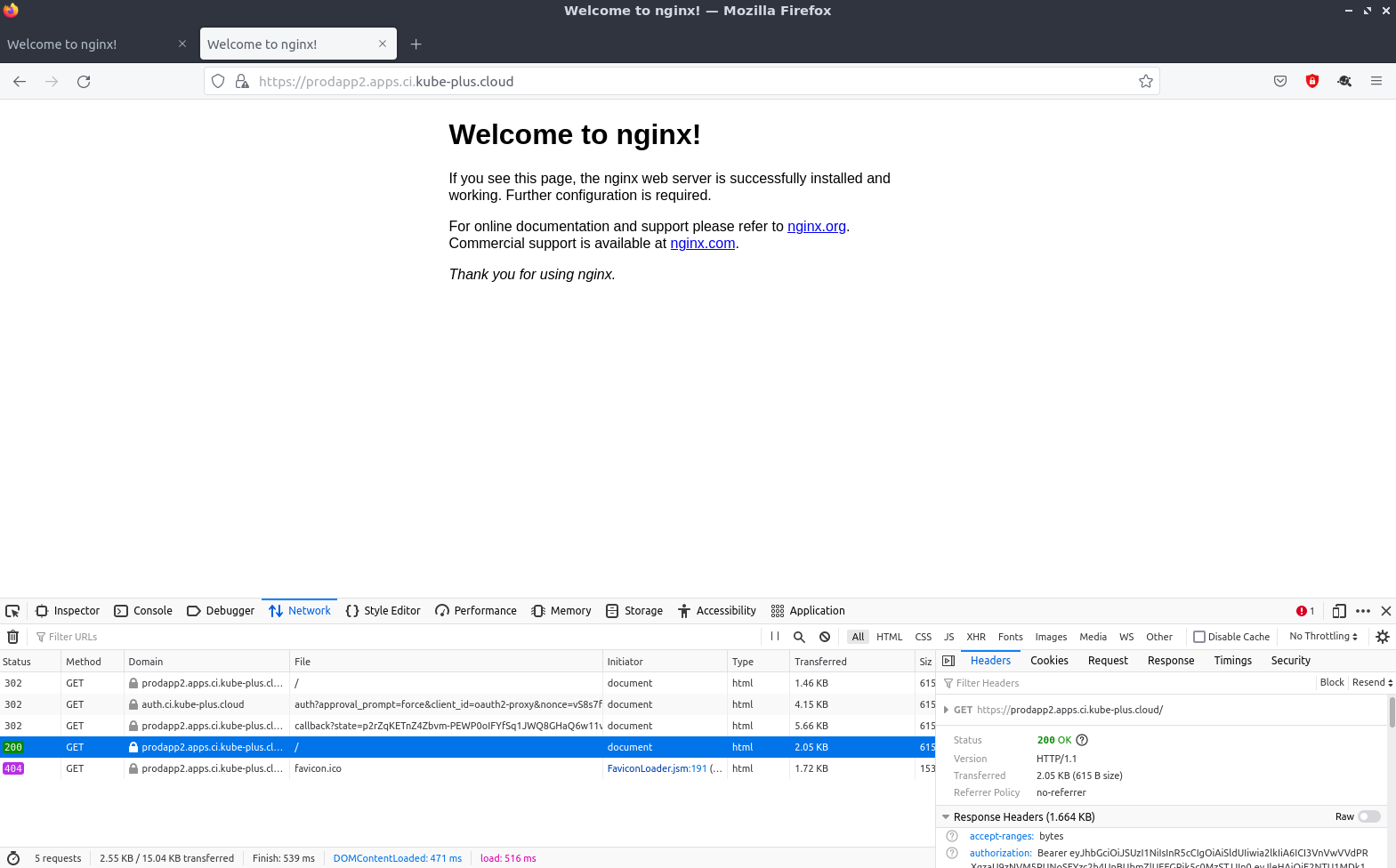

# Checking authentication login and SSO

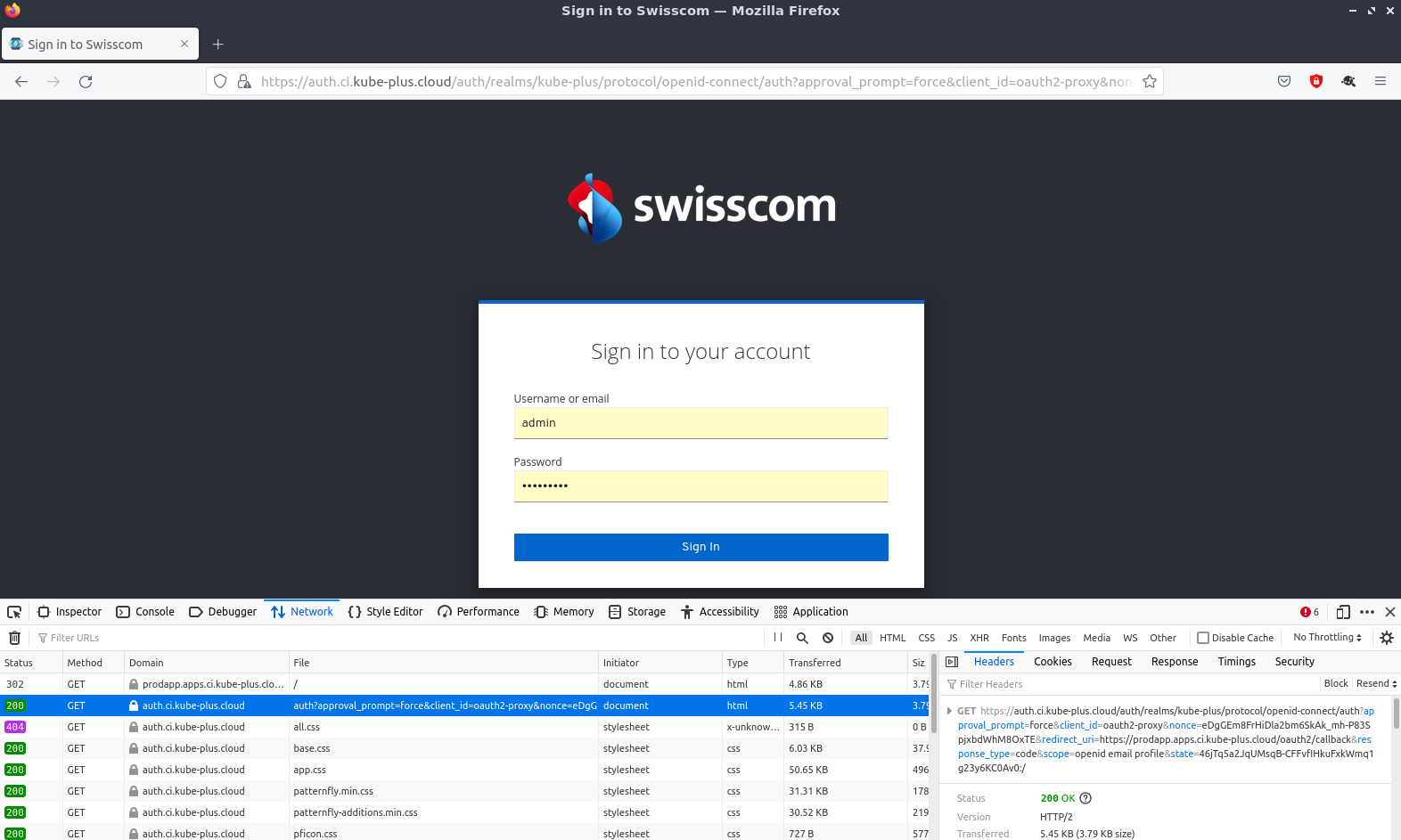

# Check login prompt

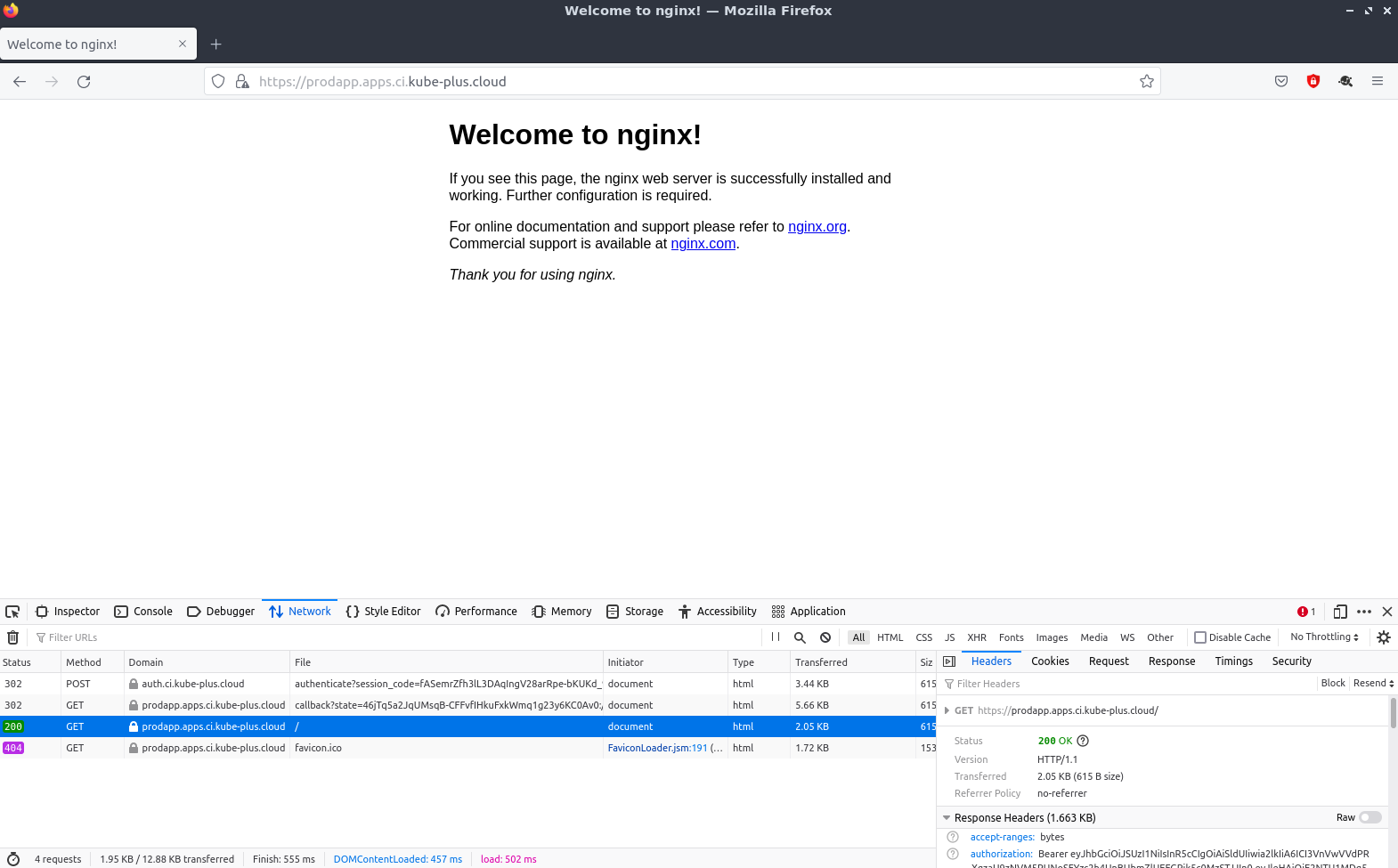

We request the URL https://prodapp.ci.kube-plus.cloud/ in a browser.

If we are not yet authorized, we are redirected and the expected login page appears.

Since we did not configure specific permissions on Keycloak for this client, we can log in with an existing Keycloak user (admin or kube-plus).

After a successful login, we are back on the default nginx welcome page.
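The redirect can also be checked from a terminal. The sketch below assumes `curl` is installed and uses hypothetical helper names. It requests the app without a session cookie, does not follow redirects, and reports the first hop. With `--skip-provider-button=true`, we would expect a redirect towards the Keycloak realm's authorization endpoint (`<issuer>/protocol/openid-connect/auth`). The exact status code may differ between oauth2-proxy versions, so treat this only as a sanity check.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a redirect target points at the Keycloak
# authorization endpoint of the given realm issuer URL.
is_keycloak_login_redirect() {
  local location="$1" issuer="$2"
  case "$location" in
    "$issuer"/protocol/openid-connect/auth*) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: print "<status> <redirect target>" for an
# unauthenticated request (no cookies, redirects not followed).
first_hop() {
  curl -s -o /dev/null -w '%{http_code} %{redirect_url}\n' "$1"
}

# Usage (needs network access to the cluster):
#   read -r status location < <(first_hop https://prodapp.ci.kube-plus.cloud/)
#   echo "HTTP $status -> $location"
#   is_keycloak_login_redirect "$location" \
#     https://auth.ci.kube-plus.cloud/auth/realms/kube-plus && echo "login redirect OK"
```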

# Check SSO

Next, we copy the existing YAML file and replace the name prodapp with prodapp2 throughout the file.

We deploy the copied and adjusted manifest with kubectl.

Finally, we adjust the "oauth2-proxy" client on Keycloak to also allow the new redirect URI 'https://prodapp2.ci.kube-plus.cloud/oauth2/callback'.
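The copy-and-rename step can be scripted with `sed`. This is a minimal sketch that assumes the manifest is saved as `prodapp-oauth2.yaml` in the current directory. `make_variant` is a hypothetical helper. Because the replacement is a plain substring match, every occurrence of `prodapp` is renamed, including the service, deployment, ingress host, TLS secret name and labels. Objects whose names do not contain `prodapp` keep their names, such as the `oauth2-proxy` Secret, ServiceAccount and ClusterRole. Applying the copy therefore updates those shared objects rather than duplicating them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a renamed copy of a manifest by replacing every
# occurrence of one app name with another (plain substring replacement;
# the names must not contain sed special characters).
make_variant() {
  local src="$1" from="$2" to="$3" dst="$4"
  sed "s/${from}/${to}/g" "$src" > "$dst"
}

# Usage (assumes prodapp-oauth2.yaml exists in the current directory):
#   make_variant prodapp-oauth2.yaml prodapp prodapp2 prodapp2-oauth2.yaml
#   kubectl apply -f prodapp2-oauth2.yaml
```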

We can now access the other app (its own nginx/oauth2-proxy pair) without logging in again. SSO works.

In this case, we used the same Keycloak OAuth client "oauth2-proxy" for both apps.

Using a different OAuth client with a similar configuration in the same realm would not affect the SSO experience, because the login session is held by Keycloak for the realm rather than by the individual client.